I’ll start with a local wake-up call. In 2025, Deloitte Australia had to partially refund a government client after a major report was found to contain serious errors, including fabricated references and a made-up court quote, with the firm confirming it used a generative AI tool as part of the drafting process.

And it’s not a one-off. Courts are now regularly sanctioning lawyers for filing documents that include AI-generated “hallucinated” citations, because they didn’t verify the output.

Here’s the thing: AI isn’t “coming”. It’s already inside most SMEs, not because the board approved it, but because staff are quietly using chatbots, writing assistants and automation features to move faster.

Australia’s privacy regulator even calls out these exact tools (chatbots, content generators, productivity assistants) as common AI products now being deployed.

That’s why an AI policy has become urgent. The biggest risk for SMEs isn’t adopting AI. It’s adopting it without boundaries.

AI Has Shifted from “Tool” to “Risk Multiplier”

AI now touches everyday work: client emails, proposals, marketing copy, summaries, reporting, and increasingly, decision support. When AI is shaping what you tell a customer or how you handle their information, it stops being a “productivity tool” and starts becoming a legal and reputational risk multiplier.

In Australia, the Privacy Act can apply when AI involves personal information, and the OAIC is blunt about that. If your team pastes personal or sensitive client details into a public chatbot, the risk isn’t theoretical; it’s a compliance headache waiting to happen.

On the consumer side, Treasury’s review of AI and the Australian Consumer Law highlights the risk of misleading AI-generated content and explains how the existing consumer law framework protects consumers. The guidance from Treasury and the ACCC on false or misleading claims sets a simple standard that still applies: your business must be able to back up what it says. AI doesn’t change that responsibility; it just makes it easier to publish mistakes at speed.

In my world, I treat AI like a junior team member: helpful, fast… and absolutely not the final authority.

“We’re Too Small to Worry About This” Is a Dangerous Myth

I understand why SMEs say this: we’re busy, lean, and allergic to paperwork. But small businesses are often more exposed, not less.

Look at cybercrime alone: Australia’s Annual Cyber Threat Report shows the average self-reported cost of cybercrime for small businesses was about $49,600 in FY2023-24. The same report notes cybercriminals are adapting and leveraging AI to reduce the sophistication needed to operate.

Now blend that reality with “shadow AI”: an employee uses a random AI tool, uploads something sensitive, or sends an unverified AI-generated response to a customer… and suddenly you’ve got a confidentiality issue, a complaint, or a trust crisis, with fewer buffers to absorb the hit.

I’ve seen many businesses learn the hard way that “small” doesn’t mean “safe”. It often means the margin for error is thinner.

What an AI Policy Actually Does (and Doesn’t Do)

A good AI policy isn’t a 40-page monster. It’s operational clarity.

Australia’s National AI Centre provides an AI policy guide and template designed as a starting point for organisations that use AI.

That tells you something important: policy isn’t just for corporates; it’s now considered baseline good practice.

A practical AI policy answers the questions staff are already asking:

- Can I use AI for this task?

- What information is off-limits?

- When do I need human review?

- Who is accountable?

I’ve implemented three “non-negotiables” in my own governance at i4T Global:

- Data boundaries: no personal, sensitive, or confidential client info goes into public AI tools (aligned to OAIC guidance).

- Human review for risk: anything customer-facing, financial, legal, or reputational gets checked by a human before it leaves the building.

- Accountability sits with people: consistent with Australia’s AI Ethics Principles, humans remain responsible for outcomes.

This isn’t bureaucracy. It’s how you keep speed and control.
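If you have someone technical on the team, the three non-negotiables above can even be expressed as a simple pre-flight check. What follows is a minimal sketch, not how any particular tool works: the tool name, the risk categories, the `check_ai_use` function and the two patterns for spotting personal data are all hypothetical placeholders I've chosen for illustration. Real detection of personal or confidential information needs far more than a couple of patterns.

```python
import re
from dataclasses import dataclass

# Hypothetical policy settings; replace with your own approved tools and categories.
APPROVED_TOOLS = {"approved-assistant"}
HIGH_RISK_CATEGORIES = {"customer-facing", "financial", "legal", "reputational"}

# Deliberately rough patterns for obvious personal data (illustrative only).
PERSONAL_DATA_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),        # email address
    re.compile(r"\b04\d{2}\s?\d{3}\s?\d{3}\b"),     # Australian mobile number
]


@dataclass
class Decision:
    allowed: bool              # may the text be sent to the AI tool?
    needs_human_review: bool   # must a person check the output before it goes out?
    accountable_person: str    # accountability always sits with a named human
    reasons: list              # why the request was blocked, if it was


def check_ai_use(tool: str, text: str, category: str, owner: str) -> Decision:
    """Apply the three non-negotiables to one proposed use of AI."""
    reasons = []

    # Data boundaries: only approved tools, and no obvious personal data.
    if tool not in APPROVED_TOOLS:
        reasons.append(f"'{tool}' is not an approved tool")
    if any(pattern.search(text) for pattern in PERSONAL_DATA_PATTERNS):
        reasons.append("text appears to contain personal information")

    return Decision(
        allowed=not reasons,
        # Human review for risk: high-risk work is always checked by a person.
        needs_human_review=category in HIGH_RISK_CATEGORIES,
        accountable_person=owner,
        reasons=reasons,
    )
```

For example, `check_ai_use("approved-assistant", "Draft an intro for our services proposal", "customer-facing", "Sam")` would be allowed but flagged for human review, while pasting a client's email address into an unapproved chatbot would be blocked with both reasons listed. The point isn't the code; it's that a good policy is clear enough to be written down this plainly.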

The Hidden Risk: Invisible AI Usage

One of the biggest threats I see is undocumented, informal AI use – good people doing “smart shortcuts” without realising the downstream risk.

Samsung’s experience is a famous example: reports say engineers uploaded sensitive material to a chatbot, which triggered internal restrictions and a crackdown. The lesson for SMEs isn’t “ban AI”. It’s: make usage visible and governed.

That’s why I prefer an “open door” approach:

- We allow approved tools.

- We train people on what not to share.

- We normalise saying, “Hey, I used AI for the first draft; can you review it?”

The OAIC’s guidance explicitly addresses freely available tools like public chatbots, which is exactly where invisible AI use tends to happen. Your policy doesn’t kill innovation. It brings it into the open, where it can be managed.

AI Governance Is Now a Leadership Issue

Here’s the uncomfortable truth: if AI is being used in your business and there’s no policy, no guidance, and no accountability, that’s not a technology gap. It’s a leadership gap.

The government has already moved on this. The Australian Government’s AI in government policy sets expectations around governance, operationalising responsible use, designated accountability, and risk-based actions. If that’s the direction public sector governance is heading, private-sector expectations (from clients, insurers, regulators, and courts) won’t be far behind.

And on the “what good looks like” side, Australia’s Guidance for AI Adoption lays out practices for responsible governance and adoption, explicitly linking responsible AI to trust and risk management.

In my own company, I treat an AI policy as a trust signal. It says: we’re not winging it.

What SMEs Should Do Now

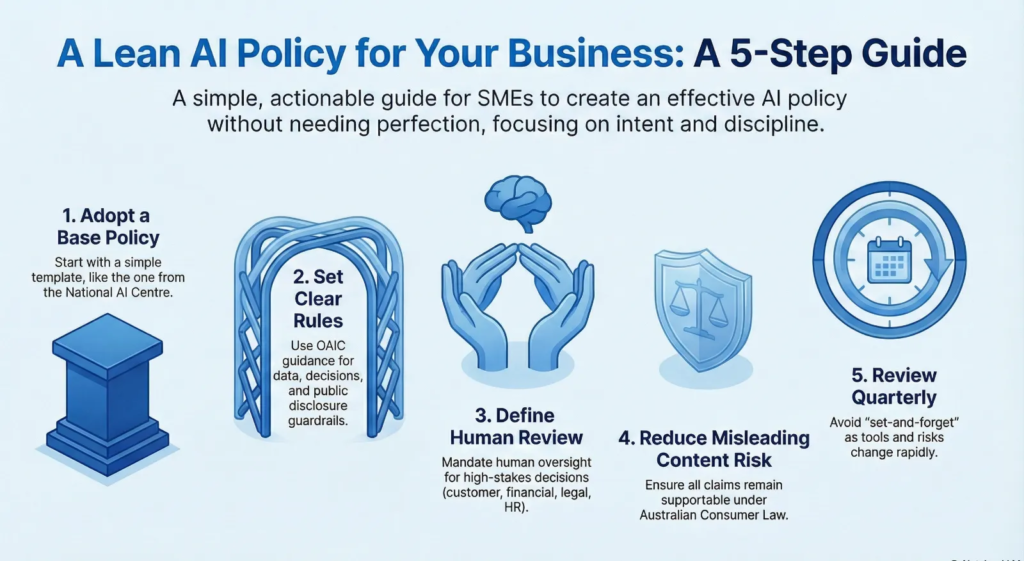

You don’t need perfection. You need intent and discipline. Here’s the lean approach I recommend (and use myself):

- Adopt a simple AI policy using the National AI Centre template as your base.

- Set clear rules on data, decisions and disclosure using OAIC guidance as your guardrail (especially around personal information and public chatbots).

- Define human review points (customer-facing, financial, legal, HR decisions, anything high stakes).

- Reduce “misleading content” risk by reminding teams that Australian Consumer Law expectations still apply, and your claims must be supportable.

- Review quarterly, because tools and risks change quickly (and “set-and-forget” is how incidents happen).

If you do just those five things, you’ll be ahead of most SMEs, and you’ll dramatically reduce the odds of learning the hard way.

Over to You

AI is moving faster than regulation. That’s not new; we’ve seen it with every major digital shift. But trust is still the currency that keeps SMEs alive.

An AI policy isn’t about fear. It’s about protecting trust with customers, staff, and the future you’re building. And if you want a simple north star while you write yours, my latest book, Think Digital – Rewired for the AI Age, is a genuinely useful anchor. It covers key concepts ranging from human-centred values and the role of leadership to an AI adoption roadmap and the safe and ethical use of AI.

The businesses that win won’t be the ones that panic or pretend AI isn’t happening. They’ll be the ones who adopt it with intent, clarity, and responsibility.

Get your copy here: